One of the biggest misconceptions about cameras, specifically those found on smartphones, is that the more megapixels they have, the better they are.

One symptom of this misconception is the so-called “megapixel war,” in which hardware manufacturers constantly try to one-up each other with ever-higher megapixel counts, luring customers into spending more on a product that is perceived to have a “better camera” simply because it has more megapixels.

Unfortunately, this is rarely the case.

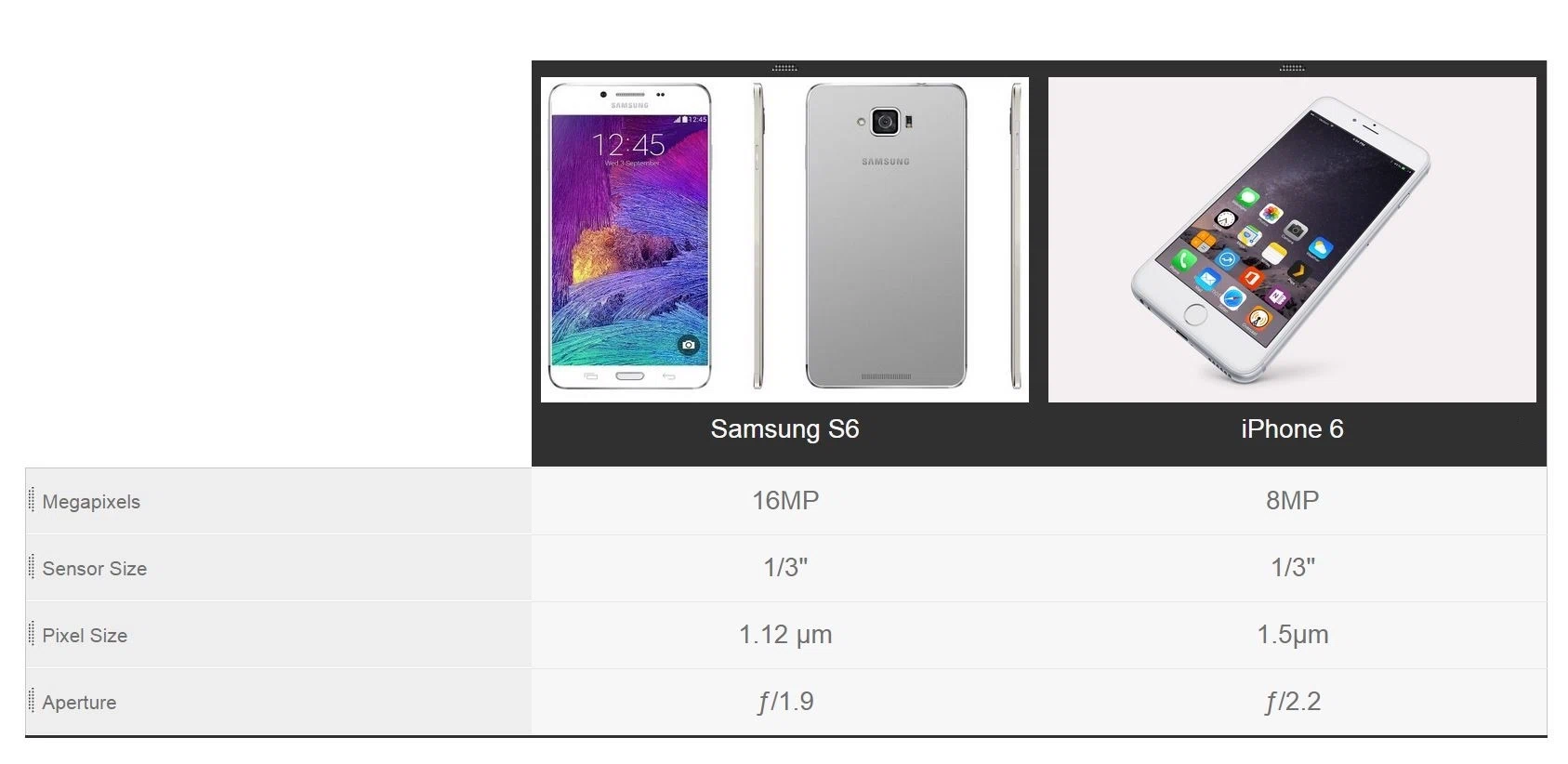

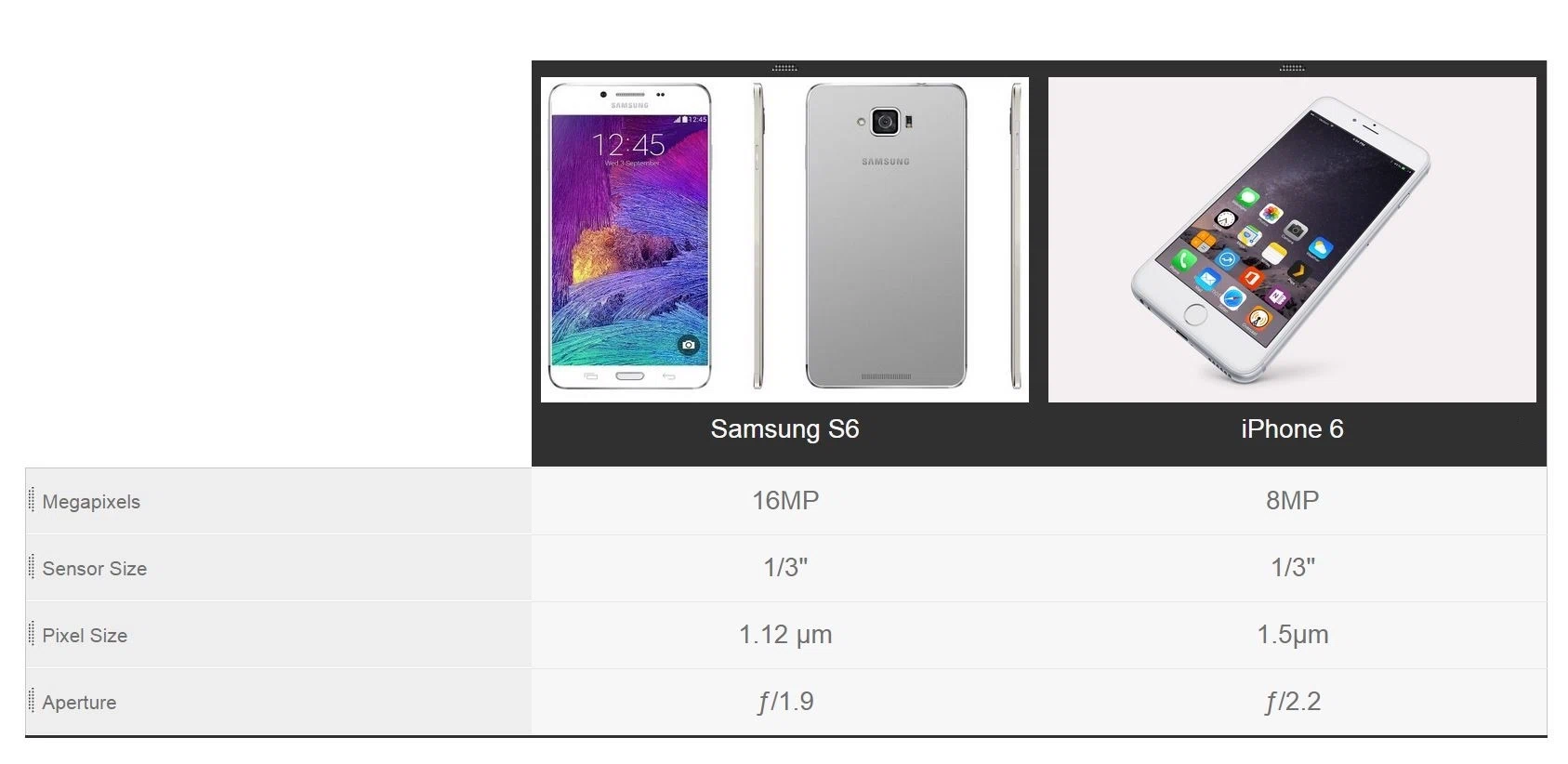

Case in point: the iPhone 6 has 8 megapixels compared to the 16 megapixels found on the Galaxy S6, yet both phones have comparable picture quality, with marginal differences depending on the lighting conditions and shooting mode.

So what gives? Why isn’t the camera on the Samsung Galaxy S6 at least twice as good as the one on the iPhone 6?

To understand what’s going on, it’s important to break down the different components of a camera to see why picture quality isn’t just a question of megapixels.

Here’s a rundown of the main parts of a smartphone camera, with a brief explanation of how each component works:

Sensor

The sensor is often considered one of the most important parts of a digital camera; after all, it is the part that captures the image you’re trying to take. Sensors are made up of photodetectors, one for each pixel, so an 8 megapixel camera has roughly 8 million photodetectors on its sensor. When you take a picture, light from the scene hits those photodetectors, and the resulting analog signal is converted into a digital signal that is passed on to the imaging software, which determines what the photo will look like.
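The one-photodetector-per-pixel relationship is simple multiplication. The sketch below assumes a hypothetical 3264 × 2448 sensor, a common 4:3 layout sold as “8 MP,” to show that the marketed figure is a rounded count rather than an exact 8,000,000.

```python
# Hypothetical sensor layout: 3264 x 2448 photodetectors (a common 4:3 "8 MP" resolution).
WIDTH, HEIGHT = 3264, 2448

def megapixels(width: int, height: int) -> float:
    """Pixel count in millions, assuming one photodetector per pixel."""
    return width * height / 1_000_000

print(f"{WIDTH * HEIGHT:,} photodetectors = {megapixels(WIDTH, HEIGHT):.1f} MP")
```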

As a general rule of thumb, the larger the sensor, the better the photograph. This is because the size of the sensor determines how much light it can capture: the more light, the more detail, and the better the picture.

Other benefits of a larger sensor are a wider field of view for a given lens, which lets you take more wide-angle photos, and a shallower depth of field, which makes it easier to keep your subject in focus while blurring the rest of the image.
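Both effects can be put into numbers. The following is a minimal sketch that assumes two hypothetical 4:3 sensors (4.8 × 3.6 mm and 6.4 × 4.8 mm) behind the same hypothetical 4 mm lens. Light collected scales with sensor area when the f-number and shutter speed stay the same, and the horizontal field of view of a simple rectilinear lens is 2·atan(sensor width / (2 · focal length)).

```python
import math

# Hypothetical sensor dimensions (width, height) in millimetres and a hypothetical focal length.
SMALL_SENSOR_MM = (4.8, 3.6)
LARGE_SENSOR_MM = (6.4, 4.8)
FOCAL_LENGTH_MM = 4.0

def sensor_area_mm2(width_mm: float, height_mm: float) -> float:
    return width_mm * height_mm

def horizontal_fov_deg(sensor_width_mm: float, focal_length_mm: float) -> float:
    """Horizontal field of view of an idealized rectilinear lens, in degrees."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))

# Same f-number and shutter speed assumed, so collected light is proportional to area.
area_ratio = sensor_area_mm2(*LARGE_SENSOR_MM) / sensor_area_mm2(*SMALL_SENSOR_MM)
print(f"Larger sensor collects {area_ratio:.2f}x as much light")
print(f"Field of view: {horizontal_fov_deg(SMALL_SENSOR_MM[0], FOCAL_LENGTH_MM):.1f} deg "
      f"vs {horizontal_fov_deg(LARGE_SENSOR_MM[0], FOCAL_LENGTH_MM):.1f} deg")
```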

Pixel Size

Not all pixels are created equal, which helps explain why more megapixels don’t automatically translate into a better picture. In the previous example, we used a smartphone camera sensor with 8 million photodetectors. It is entirely possible to cram another 8 million photodetectors (and therefore another 8 million pixels) onto that sensor, doubling the pixel count without changing the size of the sensor.
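Doubling the pixel count on the same sensor halves the area of each pixel, while each pixel’s width shrinks by a factor of about 1.41 (the square root of 2). The sketch below assumes a hypothetical 4.8 × 3.6 mm sensor and square pixels that tile it with no gaps, which ignores the wiring and circuitry a real sensor needs.

```python
import math

# Hypothetical 4.8 mm x 3.6 mm sensor, expressed in square micrometres.
SENSOR_AREA_UM2 = 4.8e3 * 3.6e3

def pixel_pitch_um(pixel_count: float, sensor_area_um2: float = SENSOR_AREA_UM2) -> float:
    """Width of one square pixel, assuming the pixels cover the whole sensor with no gaps."""
    return math.sqrt(sensor_area_um2 / pixel_count)

for mp in (8, 16):
    pitch = pixel_pitch_um(mp * 1_000_000)
    print(f"{mp} MP: pitch {pitch:.2f} um, area {pitch ** 2:.2f} um^2 per pixel")
```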

Doubling the number of pixels comes with its fair share of consequences, however. For one, each photodetector now has half its previous area, so it receives roughly 50% less light, and the camera has to amplify that weaker signal more to produce the final image. The problem is most apparent in low-light conditions, where the camera has trouble determining accurate brightness levels for each pixel, resulting in random variations in color and brightness known in photography as noise.
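One source of that randomness is photon shot noise: the number of photons landing on a pixel varies from shot to shot following a Poisson distribution, so a pixel that expects N photons has a signal-to-noise ratio (SNR) of about √N. The sketch below uses hypothetical photon counts (200 for the larger pixel, 100 for the half-size one). It ignores other noise sources such as read noise and shows that halving the light lowers the SNR by about 1.41×.

```python
import math
import random
import statistics

def poisson_sample(mean_photons: float, rng: random.Random) -> int:
    """Draw one Poisson-distributed photon count using Knuth's method (fine for small means)."""
    limit = math.exp(-mean_photons)
    count, product = 0, rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count

def simulated_snr(mean_photons: float, trials: int = 5000, seed: int = 42) -> float:
    """Expose one pixel `trials` times and return mean / standard deviation of its readings."""
    rng = random.Random(seed)
    readings = [poisson_sample(mean_photons, rng) for _ in range(trials)]
    return statistics.mean(readings) / statistics.stdev(readings)

# Hypothetical photon counts per exposure: halving pixel area halves the photons collected.
LARGE_PIXEL_PHOTONS = 200
SMALL_PIXEL_PHOTONS = LARGE_PIXEL_PHOTONS // 2

for label, photons in [("large pixel", LARGE_PIXEL_PHOTONS), ("half-size pixel", SMALL_PIXEL_PHOTONS)]:
    theory = math.sqrt(photons)  # shot-noise-limited SNR = N / sqrt(N) = sqrt(N)
    print(f"{label}: theoretical SNR {theory:.1f}, simulated SNR {simulated_snr(photons):.1f}")
```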

More megapixels only really matter when you want to print large photographs (who does that anymore?) or when you do a lot of cropping after a shoot.
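Both use cases come down to simple arithmetic. The sketch below assumes a 300 pixels-per-inch print target (a common guideline, not a hard rule) and hypothetical 4:3 resolutions for an “8 MP” and a “16 MP” sensor. It shows how large each can be printed and how many megapixels survive a crop that keeps half the width and half the height.

```python
def print_size_inches(width_px: int, height_px: int, ppi: int = 300) -> tuple[float, float]:
    """Largest print size at `ppi` pixels per inch (300 ppi assumed as the default target)."""
    return width_px / ppi, height_px / ppi

def crop_megapixels(width_px: int, height_px: int, keep_fraction: float) -> float:
    """Megapixels left after keeping `keep_fraction` of both the width and the height."""
    return int(width_px * keep_fraction) * int(height_px * keep_fraction) / 1_000_000

# Hypothetical 4:3 resolutions for an "8 MP" and a "16 MP" sensor.
for name, (w, h) in {"8 MP": (3264, 2448), "16 MP": (4608, 3456)}.items():
    pw, ph = print_size_inches(w, h)
    print(f"{name}: {pw:.1f} x {ph:.1f} in at 300 ppi; "
          f"{crop_megapixels(w, h, 0.5):.1f} MP left after a half-width, half-height crop")
```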

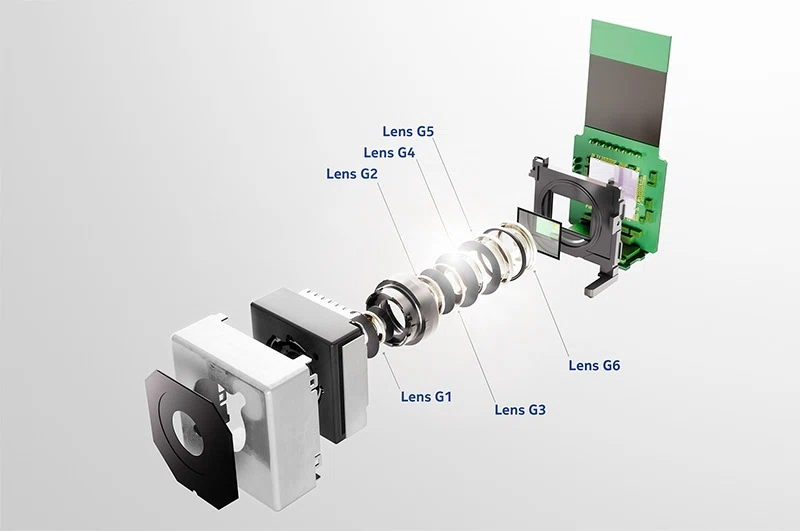

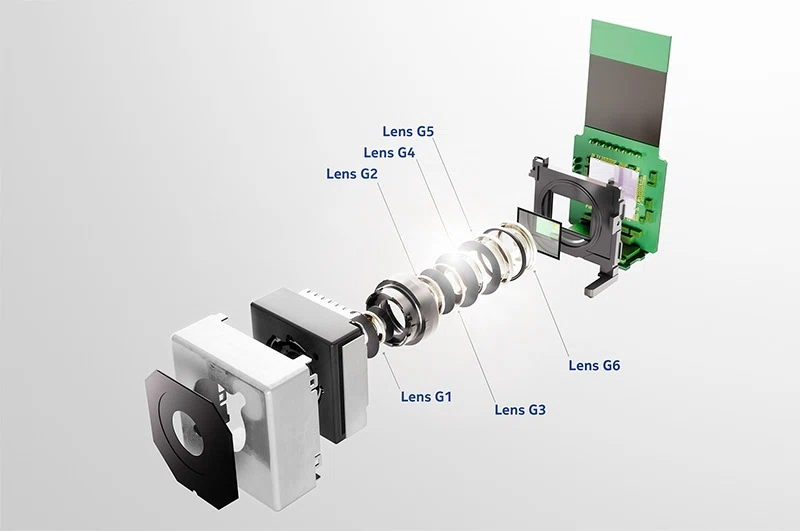

Lens

If we think of the sensor as the “bucket” that the light is captured in, then the lens is the funnel that makes sure the water, or light, is spread evenly across the bucket instead of splashing all over the place.

The lens on a smartphone camera is actually a stack of several lens elements, made of glass or plastic, that focus and shape the incoming light to fit the sensor underneath. The last lens is responsible for focusing the image, which prevents blurring and keeps the subject (the thing you’re taking a picture of) in focus.
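Focusing works because the distance between the lens and the sensor has to change with the distance to the subject. The sketch below uses the idealized thin-lens equation, 1/f = 1/d_o + 1/d_i, with a hypothetical 4 mm focal length. A real phone lens is a compound stack, so treat this as a simplification. Moving from a subject at infinity to one 10 cm away requires shifting the lens by only a fraction of a millimetre.

```python
import math

FOCAL_LENGTH_MM = 4.0  # hypothetical smartphone focal length

def image_distance_mm(focal_length_mm: float, subject_distance_mm: float) -> float:
    """Thin-lens equation 1/f = 1/d_o + 1/d_i, solved for the lens-to-sensor distance d_i."""
    if subject_distance_mm <= focal_length_mm:
        raise ValueError("subject must be farther away than the focal length")
    return 1 / (1 / focal_length_mm - 1 / subject_distance_mm)

for subject_mm in (math.inf, 1000, 100):
    d_i = image_distance_mm(FOCAL_LENGTH_MM, subject_mm)
    print(f"subject at {subject_mm} mm -> lens {d_i:.3f} mm from the sensor")
```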

One key difference between a smartphone camera lens and the lenses on point-and-shoot cameras and DSLRs is that the aperture (the opening that determines how much light passes to the sensor) is fixed. This means the camera has to rely on shutter speed, ISO, and post-processing software (effects) to control the exposure of the image you’re taking.
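With the f-number locked, only shutter time and ISO are left to trade against each other. The sketch below uses the standard exposure-value relation EV = log2(N²/t) and assumes a hypothetical fixed f/2.2 aperture and a hypothetical dim scene metered at EV 5 (at ISO 100). Each doubling of ISO halves the shutter time needed for a correct exposure.

```python
import math

FIXED_F_NUMBER = 2.2  # hypothetical fixed smartphone aperture

def exposure_value(f_number: float, shutter_s: float) -> float:
    """EV = log2(N^2 / t); one EV step doubles or halves the light reaching the sensor."""
    return math.log2(f_number ** 2 / shutter_s)

def shutter_for(scene_ev100: float, iso: float, f_number: float = FIXED_F_NUMBER) -> float:
    """Shutter time in seconds for a correct exposure of a scene metered as scene_ev100 at ISO 100."""
    # Each doubling of ISO adds one stop of sensitivity, raising the usable EV by log2(ISO / 100).
    ev_needed = scene_ev100 + math.log2(iso / 100)
    return f_number ** 2 / 2 ** ev_needed

DIM_SCENE_EV100 = 5  # hypothetical scene brightness
for iso in (100, 400, 1600):
    print(f"ISO {iso}: shutter {shutter_for(DIM_SCENE_EV100, iso):.4f} s")
```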

Another difference is the absence of optical zoom. Because smartphones are so compact, it’s hard to fit a retractable zoom lens, so what you’re left with is digital zoom. Optical zoom maintains overall picture quality by magnifying the image with the lens and still using the entire sensor. Digital zoom, by contrast, essentially “crops” a portion of the image and blows it up, producing a result that is blurrier and more pixelated than what you would get with optical zoom.
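Digital zoom can be sketched as “crop the centre, then enlarge.” A zoom factor of k keeps only 1/k² of the real pixels, so 2× digital zoom on an 8 MP image works from about 2 MP of actual data. The sketch below assumes an integer zoom factor and a grayscale image stored as a list of rows. It uses nearest-neighbour enlargement for clarity, while real camera software likely uses smoother interpolation. Either way, no new detail is created.

```python
def digital_zoom(image: list[list[int]], factor: int) -> list[list[int]]:
    """Crop the central 1/factor of the image, then enlarge it back to full size."""
    height, width = len(image), len(image[0])
    crop_h, crop_w = height // factor, width // factor
    top, left = (height - crop_h) // 2, (width - crop_w) // 2
    crop = [row[left:left + crop_w] for row in image[top:top + crop_h]]
    # Nearest-neighbour upscaling: each output pixel copies the closest cropped pixel.
    return [[crop[y * crop_h // height][x * crop_w // width] for x in range(width)]
            for y in range(height)]

# A tiny 4x4 "image" whose pixel values are just their positions 0..15.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
zoomed = digital_zoom(image, 2)
for row in zoomed:
    print(row)
```

The zoomed result has the same dimensions as the original, but only four distinct values survive, each repeated in a 2 × 2 block. That repetition is the “pixelated” look.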

Algorithms and Software

A key part of turning the analog signal (the electrical signal the sensor produces from the light entering the camera) into a digital signal (the data that makes up the photo on your phone) is the on-board software and algorithms that process this data into the final image.
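The analog-to-digital step itself is quantization: each pixel’s continuous voltage is mapped to one of a fixed number of integer levels. The sketch below assumes a hypothetical 10-bit converter (1,024 levels) with a 1.0 V full-scale range. Anything at or above full scale lands on the same maximum code, which is one way bright highlights get “blown out.”

```python
def quantize(voltage: float, full_scale: float = 1.0, bits: int = 10) -> int:
    """Map an analog voltage in [0, full_scale] to an integer code (hypothetical 10-bit ADC)."""
    levels = 2 ** bits
    clipped = min(max(voltage, 0.0), full_scale)  # signals outside the range are clipped
    return min(int(clipped / full_scale * levels), levels - 1)

for v in (0.0, 0.25, 0.5, 1.0, 1.7):
    print(f"{v:.2f} V -> code {quantize(v)}")
```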

The software that processes the digital signal into a digital photo is crucial in determining what the resulting photo will look like, which is why there is a bevy of trade secrets and patents covering photo-processing algorithms and software.

In addition to providing electronic image stabilization, a smartphone camera’s software also determines the ISO (the camera’s sensitivity to light), white balance, and vibrance (the intensity of muted colors) of a given photograph.
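As one concrete example of this kind of processing, the classic “gray world” white-balance method assumes the average color of a scene should be neutral gray. It scales the red, green, and blue channels so their averages match. This is a textbook technique chosen for illustration, not necessarily what any phone maker uses, and the sketch assumes 8-bit RGB pixels stored as tuples.

```python
def gray_world_balance(pixels: list[tuple[int, int, int]]) -> list[tuple[int, int, int]]:
    """Scale each RGB channel so its average matches the overall average (gray-world assumption)."""
    n = len(pixels)
    channel_means = [sum(p[c] for p in pixels) / n for c in range(3)]
    target = sum(channel_means) / 3
    gains = [target / m if m else 1.0 for m in channel_means]  # leave an empty channel untouched
    return [tuple(min(255, round(p[c] * gains[c])) for c in range(3)) for p in pixels]

# Two pixels from a hypothetical scene with a warm (orange) color cast.
warm_pixels = [(200, 150, 100), (100, 75, 50)]
print(gray_world_balance(warm_pixels))
```

Here the red channel is scaled down and the blue channel scaled up, which removes the orange cast and leaves both pixels neutral gray.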

The bottom line regarding a smartphone camera’s algorithms and software is this: even if you have the best sensor and lens, they won’t mean much without the software to take advantage of their performance. Similarly, no amount of software will overcome the handicap of a bad sensor or lens. You need both.

Comparing iPhone 6 to Galaxy S6

Coming back to the comparison between the iPhone 6 and the Galaxy S6, it’s clear that there’s more to great pictures than simply megapixels.

Let’s look at the camera specs on the iPhone 6 and Galaxy S6 side-by-side with the sensor and lens in mind.

Apart from megapixel count, the iPhone 6 is very evenly matched with the Galaxy S6. Both phones have sensors of exactly the same size, although the Galaxy S6 has a slightly wider aperture (a lower f-number), which helps deliver more light to its larger number of pixels. By contrast, the iPhone 6 has pixels that are 25% larger than the Galaxy S6’s, allowing each pixel to capture more light.

Despite having twice as many pixels as the iPhone 6, the Samsung Galaxy S6 is neck and neck with the iPhone when it comes to photo quality. The reason is that photo quality is ultimately determined by the sensor, the lens, pixel size, and the camera’s software, not by the number of megapixels.

Lens diagram image courtesy of TechSpot